Two Years In

Sergey Bykov

I’ve wanted to publish an update for quite some time on how things are going at Temporal. I’ve had all the right excuses at my disposal for not doing it: “I’m just too busy while we are finishing X”; “we are just about to announce Y”; “I need the time right now to help my son with physics, after that I’ll have more time”; “after I return from this trip”; etc. Excuses are only excuses after all. If only I could write like Colin Breck, I would do it more often. Another excuse.

When I joined Temporal in September 2020, our team consisted of 13 people, almost all engineers. Now the number is 116 (at least last I heard). We have several distinct teams within engineering: Server, SDK, Cloud, Infra, Developer Tools, Security, and a “10x” team. “10x” is not the actual name of the team; I just used this moniker to reflect what the team’s been focusing on without revealing its internal name.

Engineering is not the only game in town anymore. We built several other critical functions and staffed them with excellent specialists: a Go To Market team with Sales and Solution Architects, plus Technical Writing and Education, Customer Success, Recruiting, Finance, Product, and Design teams.

We also happened to raise our B round in December 2021 on great terms, just three weeks before the VC market changed dramatically. I have a very limited understanding of this part of business, but it seems we had timed the market very well. We are actively hiring to accelerate investments across multiple areas. In the current market environment, sometimes this feels a little bit surreal.

Remote

When I joined, Temporal was a primarily local company, with a plan to get back to office after the pandemic came to an end. A couple of months later, we realized that the desire to stay local was significantly limiting our ability to hire. We decided to become a fully remote company. That immediately unlocked access to great hires outside of the Seattle area. We hired people in the Bay Area, East Coast, Midwest, Colorado, Texas, Florida, and Canada. However, the company is still very skewed toward the West Coast in general and the Seattle area in particular, especially on the engineering side.

Such a geographical makeup of the company presents an interesting challenge in terms of how to collaborate effectively. The original desire for being local was not based on convenience, but to allow for high-throughput face-to-face discussions. Sometimes a two-hour whiteboarding session is more productive than a month-long series of Zoom calls and Slack threads. Unfortunately, flying people across the continent for a day or two of discussion is a significant toll on them, with family inconvenience, travel time, and jet lag. The trickiest situation is when there’s a local “majority” that can easily meet in person and a few “remote” team members who can’t. This creates an inherent counterproductive split.

We are still trying to figure this out. We don’t buy the simplistic recipes like “all meetings have to be remote” and “just need to write everything down,” at least for the current state of the company. Especially for some of the deep technical problems we are trying to solve. I would love to learn how other companies dealt with this challenge. Should we choose a hub-and-spoke model with an HQ and “remote” employees traveling to it? Should we build a small number of hubs in key areas? How many? Seattle, Bay Area, Boston, Denver?

Collaboration Tools

We’ve been fairly open-minded about using a number of collaboration tools with overlapping functionalities. As the company grew, some of the tools phased out, some stayed but changed their role, some new ones entered the fold.

Slack continues to be the #1 collaboration tool. The introduction of huddles helped it to take over a significant fraction of Zoom calls for quick chats originating from Slack threads. On-call incident conversations happen primarily in huddles these days. Discord died out as a pseudo-office chat tool around the same time huddles were added to Slack.

Zoom is the main tool for scheduled meetings and most external calls. Go To Market teams use Gong for recording and transcribing Zoom calls, which is very handy for skimming through content of calls you weren’t part of, or for finding a particular important moment in an hour-long conversation.

Notion is our key information holding system. I keep saying it deserves its own blog post. One of these days. The combination of easy-to-use wiki-like document creation and editing functionality with the ease of adding ad hoc databases/tables has proven to be great for putting structured (data) and unstructured (text) information together. However, a consistent complaint about Notion is its search capabilities. Personally, I find its search good enough for me. But most people don’t.

We previously used Notion for task tracking and sprint planning (just another database), but we recently switched to Jira for that. The highest level of planning, the roadmaps, stayed in Notion though. Notion has connectors for Jira and GitHub, which makes such integrations possible. But I can see how many people would prefer to stay within one tool and use either Jira or GitHub projects for all of their planning and tracking.

Other Tools

GitHub Actions is our preferred CI/CD mechanism today. Before I joined, CI/CD pipelines were run using Concourse for Server and SDKs. We are gradually moving away from Concourse because GitHub Actions proved to be easier to manage. At the same time, we are working on leveraging Temporal Workflows for pipeline orchestration. This is not for ideological reasons, but simply because our engineers find it a lot more convenient to look at Workflow histories than to dig through flat logs. Support for failure handling and automatic retries is an obvious strength of Temporal. We are looking to open-source this framework when it takes its mostly final shape. Confusingly enough, GitHub Actions orchestrations are called “workflows” as well.

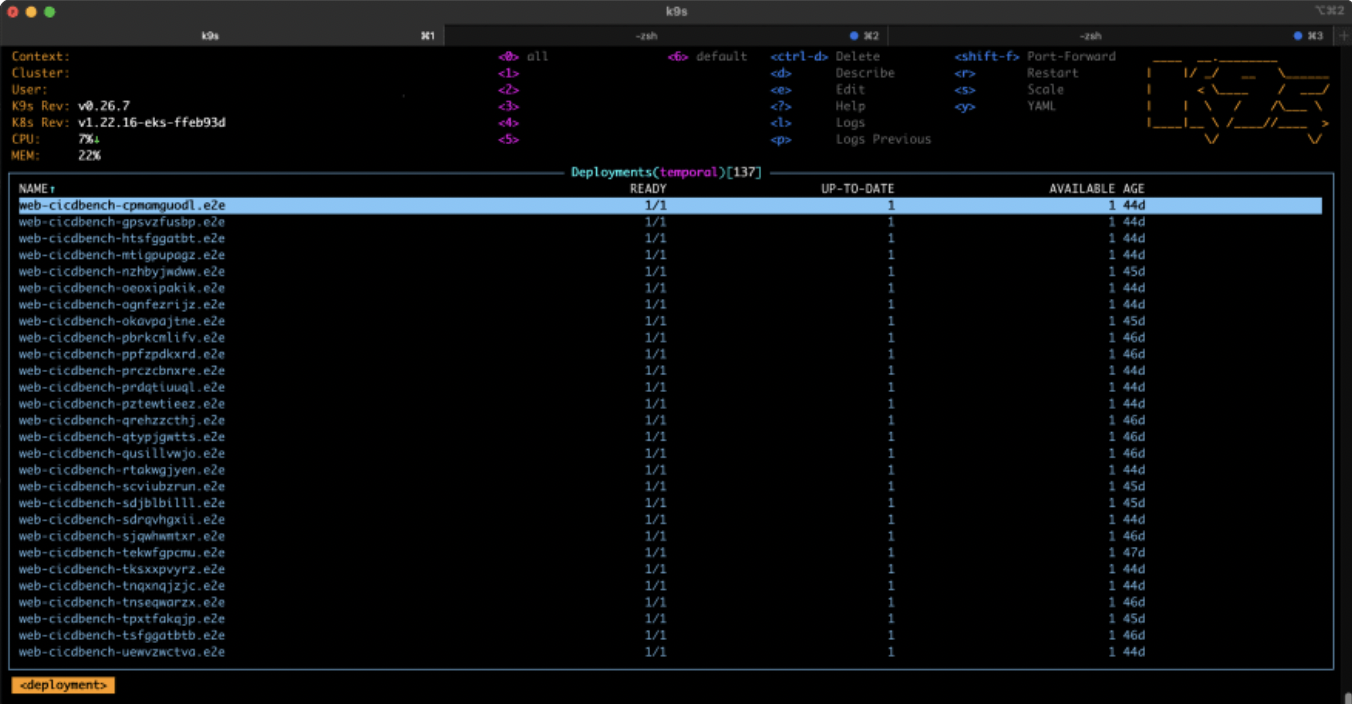

k9s is a clear favorite when it comes to interactive operations on Kubernetes clusters. It’s primarily used while on call, to monitor things and sometimes to make an immediate ephemeral change. Some people still prefer to use kubectl or VS Code instead.

Cloud

By early 2021 we had a handful of paying customers, whom we called design partners. The rationale was that even back then Temporal Server was a mature product, having already been used at scale for several years by Uber and others in production for mission-critical applications, in the form of Cadence. Hence, it was a very solid core for the Data Plane.

We started by deploying several Capacity Units of the Data Plane, which we unoriginally call Cells, in a barely automated way, with a combination of scripts and manual operations. This unlocked monetization for the product before the Control Plane automation was put in place. The downside was that we had to support production Cells, including being on call, without sufficient tooling to perform routine operations. Therefore, the operational burden was taking engineering cycles away from development. The benefit of this setup was that it constantly kept us honest: any new Data Plane or Control Plane feature had to be production ready from the start. We would also receive immediate feedback from the design partners on any and all changes. I think this was the right tradeoff that helped us to keep the quality bar high and to avoid building esoteric features.

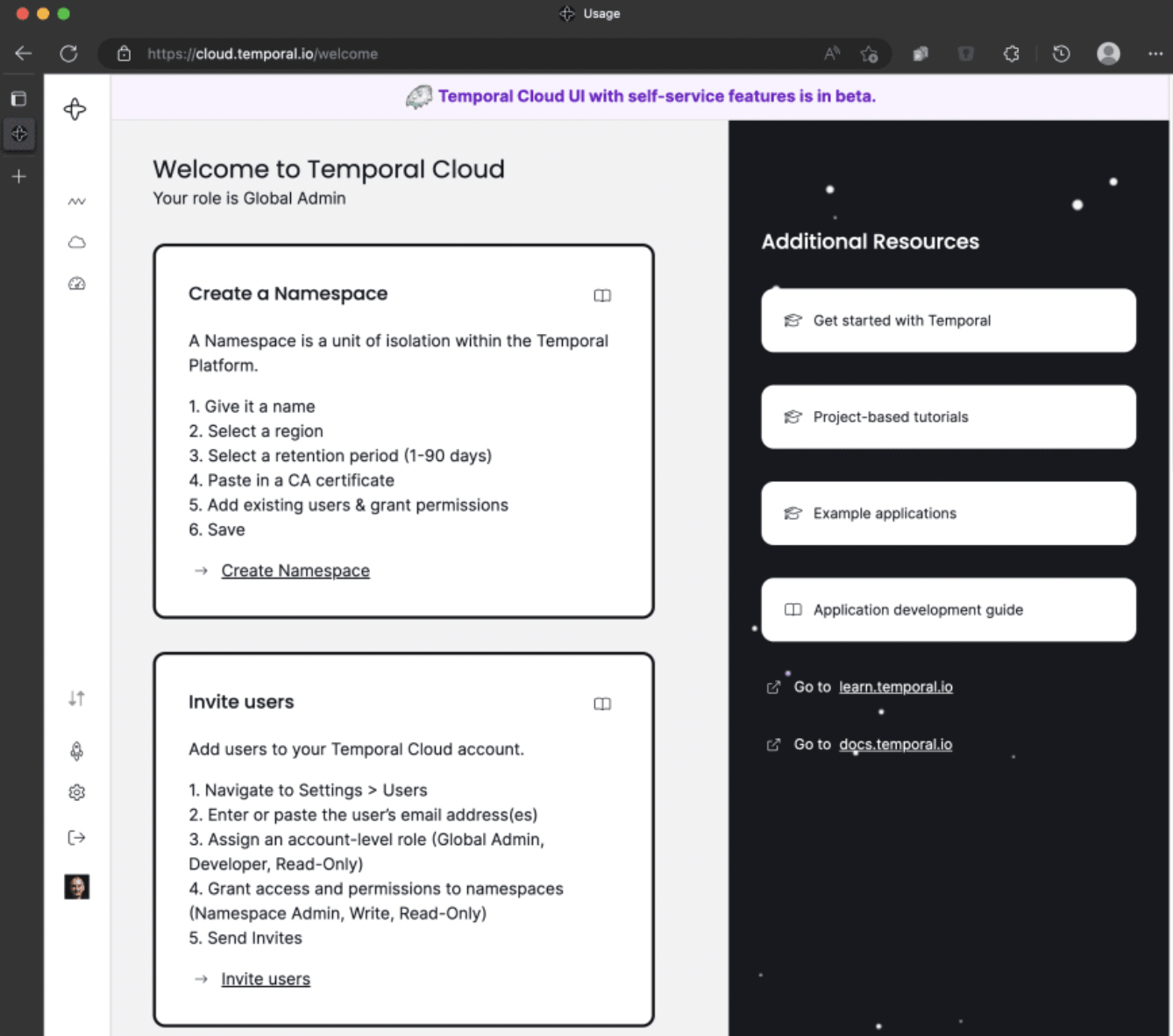

For a long while, our customers had to file support tickets for any changes to configuration of their Cloud Namespaces: creating a Namespace, updating certificates, inviting users, and so on. That meant the on-call engineer was required to make such changes on the customer’s behalf. This very manual process was a bottleneck for sales because we weren’t ready to take a large number of customers that we’d have to support via such an unscalable process. This meant we had to be very strategic about which customers to take.

Last May we set for ourselves the goal of providing a full self-serve experience. In general, as a company we avoid date-driven releases. In this case not only did we set a target date of early October, we also put a forcing function upon ourselves—we decided that we would announce availability of Temporal Cloud at the inaugural Replay conference in August. A lot of hard work happened between May and October to deliver on the promise. We ended up slipping on a couple of minor features, but otherwise delivered https://cloud.temporal.io/. With the self-serve functionality in place, we were able to quickly process the backlog of companies that had been waiting “in line” to get to Temporal Cloud and started taking new customers in a real-time fashion.

Control Plane

Each Cell in Temporal Cloud is a composition of several compute clusters, one or more databases, Elasticsearch, ingress, an observability stack, and other dependency components. As one would expect, we needed a Control Plane that would manage provisioning such resources, deployment of software and configuration changes to Cells, monitoring, alerting, and handling certain classes of failures.

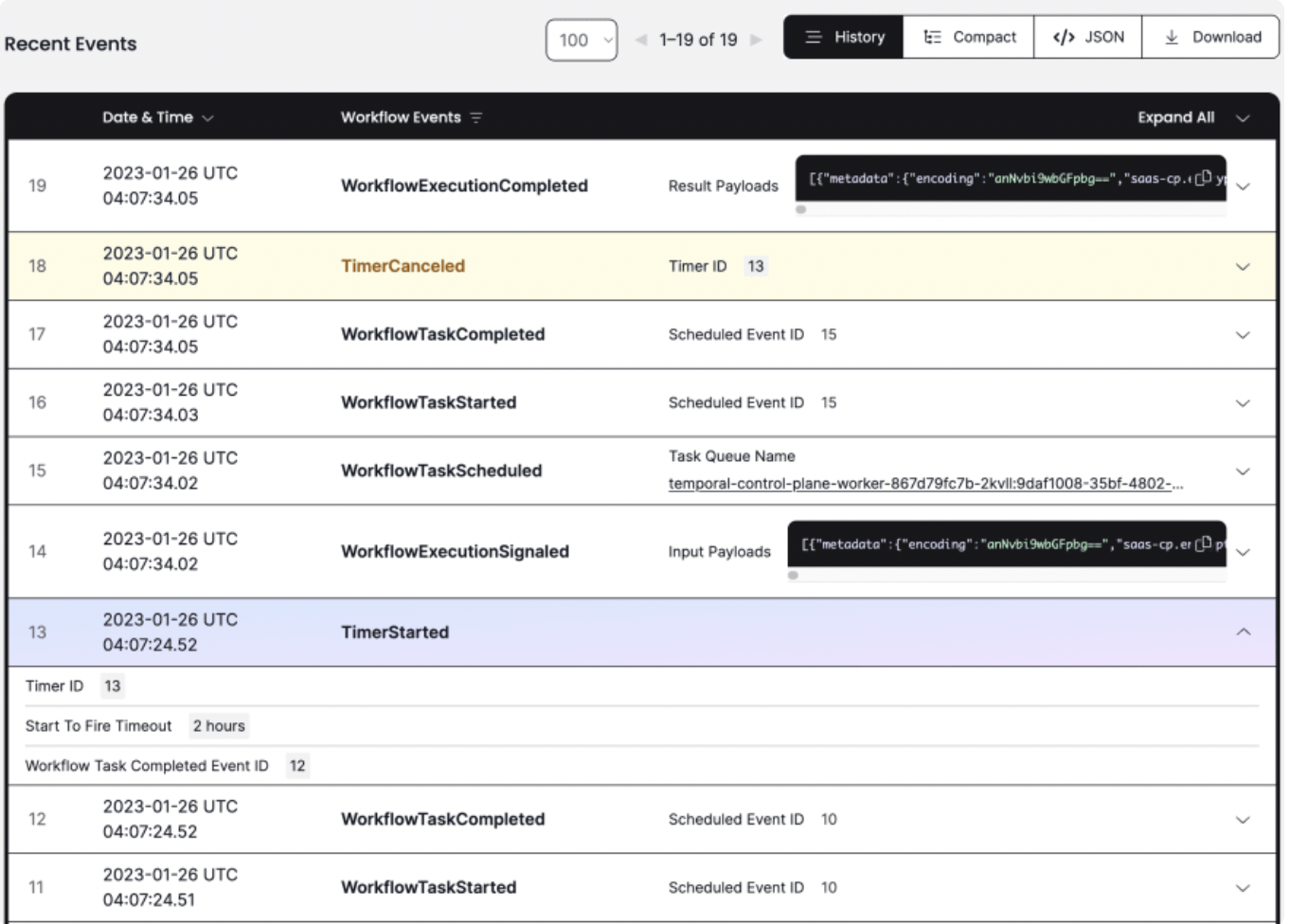

We chose to leverage Temporal for building the Control Plane. This decision was not made for ideological reasons either, but because Temporal is indeed an excellent fit for automating infrastructure management. This is actually one of the popular Temporal use cases. Operations on cloud resources can take time, sometimes a fairly long time. A dependency service might return various retriable and non-retriable errors. Retries usually require backoff. Failures require compensating actions. In general, operations in a Control Plane like that walk and quack like Workflows. We could take the “duct tape” route, writing code with a bunch of timers and queues. We could use a DSL for defining Workflows. But Temporal exists as a product in large part because these two approaches are much less developer friendly than Workflows As Code.
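To make the ingredients listed above concrete (retriable versus non-retriable errors, backoff, compensating actions), here is a minimal plain-Python sketch of what hand-rolling them might look like. It is not Temporal SDK code and not how our Control Plane is written; every name in it (`RetriableError`, `NonRetriableError`, `call_with_retries`, `run_with_compensation`) is hypothetical and exists only for illustration.

```python
import time


class RetriableError(Exception):
    """Hypothetical transient failure that is worth retrying."""


class NonRetriableError(Exception):
    """Hypothetical permanent failure; retrying would not help."""


def call_with_retries(operation, max_attempts=5, initial_delay=0.01,
                      backoff=2.0, sleep=time.sleep):
    """Call operation(), retrying RetriableError with exponential backoff.

    Any other exception, including NonRetriableError, propagates immediately.
    """
    delay = initial_delay
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except RetriableError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            sleep(delay)
            delay *= backoff  # wait longer before the next attempt


def run_with_compensation(steps):
    """Run (action, compensation) pairs in order.

    If a step ultimately fails, the compensations of all previously
    completed steps run in reverse order, then the error is re-raised.
    """
    completed = []
    try:
        for action, compensate in steps:
            call_with_retries(action)
            completed.append(compensate)
    except Exception:
        for compensate in reversed(completed):
            compensate()
        raise
```

Even this toy version keeps its state only in memory, so a process crash in the middle of `run_with_compensation` would lose track of which steps had completed. Durability of that state is exactly the part that is hard to bolt on with timers and queues.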

Reconstructing from logs what happened during the execution of a long process with a bunch of sequential and nested steps is not a pleasant experience. This is where Temporal also shines. Calls to various services are expressed as Activities with default or custom Retry Policies. Execution of a complex sequence of operations can be structured into a hierarchy of Child Workflows. Each Workflow has a complete history of its execution retained, with all inputs and outputs, timestamps, and errors automatically captured.
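As a rough illustration of why a structured history beats flat logs, the sketch below records each step with its inputs, output, timestamps, and error in one place. It is a toy in-memory model, not Temporal's actual history format or API; `HistoryEvent`, `History`, and `record` are hypothetical names.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, List, Optional


@dataclass
class HistoryEvent:
    """One recorded step: what ran, with what inputs, when, and the result."""
    step: str
    inputs: tuple
    started_at: float
    finished_at: float
    output: Any = None
    error: Optional[str] = None


@dataclass
class History:
    """Append-only list of events for a single (hypothetical) execution."""
    events: List[HistoryEvent] = field(default_factory=list)

    def record(self, step: str, fn: Callable[..., Any], *args: Any) -> Any:
        """Run fn(*args) and append an event whether it succeeds or fails."""
        started = time.time()
        try:
            output = fn(*args)
        except Exception as exc:
            self.events.append(
                HistoryEvent(step, args, started, time.time(), error=repr(exc))
            )
            raise  # the caller still sees the failure
        self.events.append(
            HistoryEvent(step, args, started, time.time(), output=output)
        )
        return output
```

With something like this, answering "which step failed, and with what inputs?" becomes a lookup in `history.events` rather than a search through interleaved log lines.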

I feel the architecture of the Control Plane deserves its own blog post and could be made into a conference talk. The bottom line is that we have an Inception-like setup: a Control Plane Cell executes a bunch of Workflows that manage a bunch of production Cells with customer namespaces and Workflows running in a multi-tenant configuration.

Scale

One of the core characteristics of Temporal is that a Temporal Cluster can scale linearly with the database and compute resources given to it, although as with any scaling, there are practical limits. The key reason for the Cell architecture is to minimize and contain the blast radius of any issue within an individual Cell. In other words, if we run ten Cells in a region, an issue with one of them, be it a software edge case or a transient infrastructure incident or a human operator mistake, would only impact about 10 percent of customers in the region. If we were instead to run a single Cell ten times the size, any issue would potentially impact all customers in the region.
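The arithmetic behind that comparison can be written down as a tiny sketch. It assumes customers are spread evenly across Cells and that a failure takes out whole Cells, which is a simplification; `blast_radius` is a hypothetical helper, not part of any Temporal tooling.

```python
def blast_radius(num_cells: int, failed_cells: int = 1) -> float:
    """Fraction of a region's customers affected when failed_cells Cells fail.

    Assumes customers are distributed evenly across num_cells Cells.
    """
    if num_cells <= 0:
        raise ValueError("num_cells must be positive")
    if not 0 <= failed_cells <= num_cells:
        raise ValueError("failed_cells must be between 0 and num_cells")
    return failed_cells / num_cells
```

Under those assumptions, `blast_radius(10)` gives 0.1 for ten Cells with one failing, while `blast_radius(1)` gives 1.0 for a single large Cell.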

At the same time, being able to run large Cells has value. We have customers that need high throughput for a single Namespace. (A Namespace cannot span Cells today.) Large Cells are also good for absorbing spikes in traffic of individual Namespaces.

We are still learning the sweet-spot approach for balancing between scaling out and up. We’ve been doing both. We’ve invested in supporting high scale for individual Cells and Namespaces. For example, one of our customers needed to be able to increase their already pretty high traffic by ten times for a day. That’s why I referred to the team that’s been focusing on the scale-up work as the “10x” team. Last summer they hit a milestone of processing one million state transitions (the lowest-level unit of work in Temporal) per second. This required some deep investments at the intersection of distributed systems and database techniques. And we have only scratched the surface.

What's Next

We had a leadership offsite in December where we discussed our plans for 2023. We settled on a number of investment areas and priorities for the year. I cannot share them because we haven’t communicated even the public part of it yet.

The biggest investment without a doubt will be into people. In my opinion, we managed to attract 116 top-notch first employees. One candidate asked during the interview what I was most proud of at Temporal. I was caught off guard and had to think for a few seconds. I said that number one on my list was that I had helped to hire the great people we have. Fun fact—the candidate accepted our offer and quickly established themselves as an amazing engineer.

We are still learning how to work more efficiently with an organically growing geographic graph of employees. With the growth of the company, we need to not only continue to hire people who are better than us, we need to evolve how we work, plan, and collaborate. We need to always be adjusting how we do things.

This is the first time in my professional career that I can truly contribute to shaping the company, its products, business, and culture. It’s been an amazing and pure experience. Quite a drug, to be honest. No surprise, I continue to be all in on Temporal, just as I was when I joined. Even more so now, as things got so much more real. Ideas turn into products and features, hires form highly functioning teams, opinions and thoughts become how we roll. And we are still only getting started.

If you would like to read other writings from Sergey on his earlier time with Temporal, check out his previous blog updates, "Why I joined Temporal" and "How It's Going".